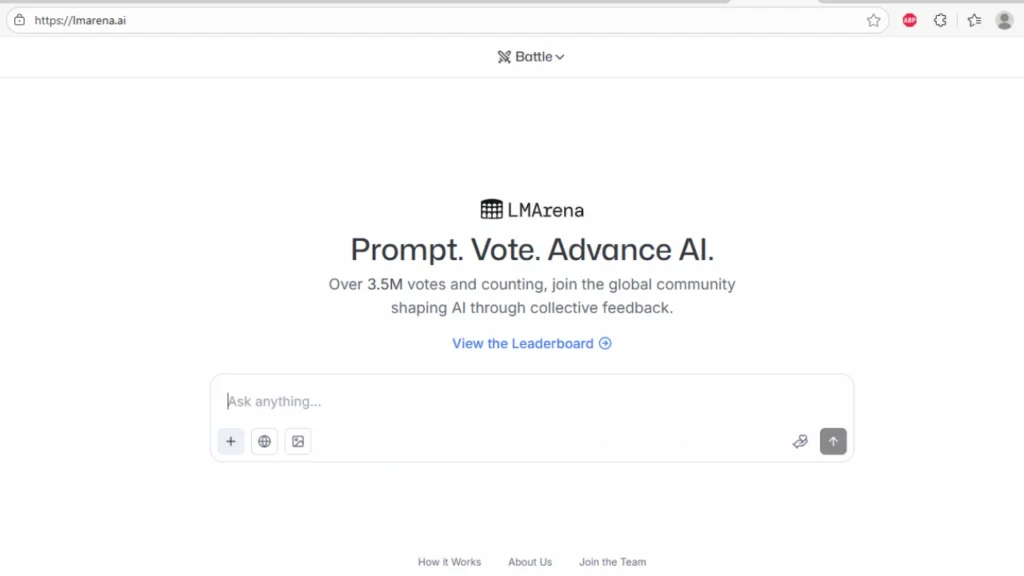

LMArena lets you test AI models anonymously. Global votes decide the winner, building open leaderboards that reflect real user preferences.

LMArena is a web-based platform that allows anyone to compare AI models through anonymous battles. Instead of relying on lab benchmarks, it gathers votes from real users, shaping a transparent leaderboard that reflects everyday performance. With millions of votes, industry partnerships, and growing recognition, LMArena is becoming one of the most influential tools in evaluating how artificial intelligence actually works for people.

What is LMArena

LMArena, previously known as Chatbot Arena and originally run by the LMSYS research group, was launched in 2023 as an initiative under UC Berkeley’s Sky Computing Lab. It is designed to compare large language models in real-world use cases. The goal is to move beyond technical benchmark scores and capture how people actually experience AI models in everyday tasks.

Since its launch, it has become a trusted name in the AI ecosystem. By 2025 it had secured $100 million in funding from investors such as Andreessen Horowitz and Lightspeed, and the platform now plays a key role in shaping how both open-source and commercial models are evaluated. Companies like OpenAI, Google, and Anthropic regularly test their models here, including pre-release versions that later make headlines.

Key Features and How It Works

LMArena has introduced a simple but effective way to compare AI performance:

- Anonymous model battles where users don’t know which AI gave which answer until they vote

- A voting system with options such as A is better, B is better, Tie, or Both bad

- Leaderboards that update dynamically as millions of votes are cast

- A prompt input system where users can test models across text, images, and multimodal tasks

- Model identity revealed after voting so users can continue chatting if they want

- Open datasets that give researchers access to millions of real user preference results

- Domain-focused tracks like BiomedArena.AI, designed for biomedical research

This method ensures that comparisons are fair, community-driven, and highly relevant for real-world AI usage.
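To make the vote-to-leaderboard step concrete, here is a minimal sketch of how pairwise votes could be turned into a ranking. It assumes an Elo-style rating update, which this article does not confirm as LMArena's actual method. The constants `K` and `BASE`, the function names, the vote labels, and the choice to score "Both bad" like a tie are all illustrative assumptions.

```python
from collections import defaultdict

K = 32          # assumed update step size (illustrative)
BASE = 1000.0   # assumed starting rating for every model (illustrative)


def expected_score(rating_a, rating_b):
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_ratings(votes, k=K, base=BASE):
    """Apply votes in order and return {model: rating}.

    votes: iterable of (model_a, model_b, outcome), where outcome is
    'a', 'b', 'tie', or 'both_bad'.
    """
    ratings = defaultdict(lambda: base)
    # Assumption: 'both_bad' is scored like a tie (half a point each).
    score_for_a = {"a": 1.0, "b": 0.0, "tie": 0.5, "both_bad": 0.5}
    for model_a, model_b, outcome in votes:
        actual = score_for_a[outcome]
        expected = expected_score(ratings[model_a], ratings[model_b])
        delta = k * (actual - expected)
        ratings[model_a] += delta   # A gains what B loses,
        ratings[model_b] -= delta   # so the total rating is conserved
    return dict(ratings)


def leaderboard(ratings):
    """Sort models from highest to lowest rating."""
    return sorted(ratings.items(), key=lambda item: item[1], reverse=True)


# Hypothetical votes between made-up model names.
votes = [
    ("model-x", "model-y", "a"),
    ("model-y", "model-z", "tie"),
    ("model-x", "model-z", "both_bad"),
]
for name, rating in leaderboard(update_ratings(votes)):
    print(f"{name}: {rating:.1f}")
```

Because each vote only nudges two ratings, a ranking built this way can update continuously as new votes arrive, which matches the dynamic leaderboards described above.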

Why It Matters

Traditional AI benchmarks often test narrow scenarios that don’t reflect how people use AI in daily life. LMArena changes this by putting human judgment at the center.

- It captures what people actually prefer in real conversations

- Over 3.5 million votes make it one of the largest human feedback systems for AI

- Major labs use the platform to fine-tune and validate their models

- Research papers cite LMArena’s datasets as a foundation for understanding user needs

For the broader AI community, this means rankings are based on actual experience, not just technical benchmarks.

Pros and Cons of LMArena

Pros

- Transparent, open, and community-driven

- Supports text, image, and multimodal testing

- Provides free access to valuable datasets

- Reflects real-world human preferences

Cons

- Proprietary models may dominate due to higher testing volume

- Risk of companies testing multiple versions privately before release

- Leaderboards are simplified and may not capture nuanced performance differences

While these concerns exist, LMArena’s commitment to openness and acknowledgment of limitations make it more credible than closed systems.
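The concerns about testing volume and simplified rankings can be illustrated with a small calculation. The sketch below computes a Wilson score interval for a model's head-to-head win rate. The vote counts are invented for illustration, and nothing here is claimed to be how LMArena reports uncertainty.

```python
import math


def wilson_interval(wins, total, z=1.96):
    """Wilson score interval for a win rate (z=1.96 is roughly 95%)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = wins / total
    denom = 1 + z * z / total
    center = (p + z * z / (2 * total)) / denom
    half_width = z * math.sqrt(
        p * (1 - p) / total + z * z / (4 * total * total)
    ) / denom
    return center - half_width, center + half_width


# Hypothetical: the same 55% win rate, observed over few or many battles.
for wins, total in [(55, 100), (5500, 10000)]:
    low, high = wilson_interval(wins, total)
    print(f"{wins}/{total}: win rate between {low:.3f} and {high:.3f}")
```

With only 100 battles the interval still includes 0.5, so the apparent lead could be noise. With 10,000 battles it does not. A single leaderboard position hides this difference, which is one reason models with more battles can appear more firmly ranked.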

Who Should Use LMArena

LMArena is valuable for different types of users:

- Researchers looking for access to human preference datasets

- Developers who want to test models before public release

- Students and enthusiasts who want to explore how different AIs respond

- Industry professionals tracking the evolution of models like GPT, Gemini, and Claude

In short, if you want to see how AI models perform in practice rather than theory, LMArena is the place to go.

FAQ Section

Q1: Is LMArena more reliable than lab benchmarks?

In one respect, yes: it reflects real user preferences instead of narrow test scores. However, its rankings can still be biased if commercial models appear in battles more often than others.

Q2: Do I need to pay to use LMArena?

No. The platform is free and open for anyone to submit prompts, vote in battles, and view leaderboards.

Q3: Which companies test their models on LMArena?

Top AI labs including OpenAI, Google DeepMind, and Anthropic have tested pre-release and proprietary models on LMArena, making it an industry-wide standard for evaluation.