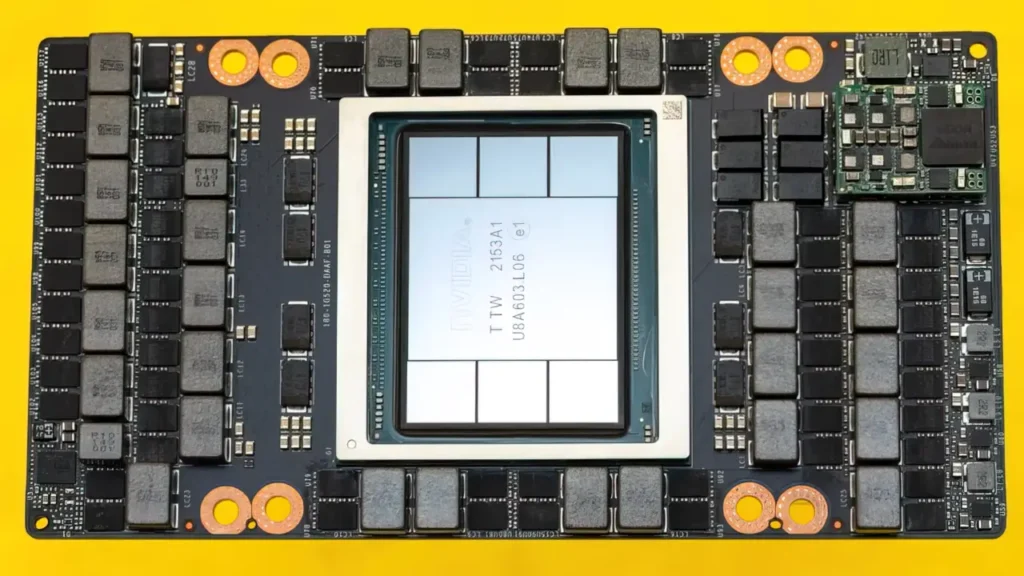

According to NVIDIA, the H100 GPU delivers up to 30 times faster AI inference and up to 9 times faster training than the A100, which makes it a leading choice for large language models and high-performance computing.

The NVIDIA H100 is an AI accelerator based on the Hopper architecture, built for deep learning and high-performance computing. With 80 billion transistors, fourth-generation Tensor Cores, and HBM3 memory, it is designed to train and deploy very large AI models faster and more efficiently than the A100.

What is the NVIDIA H100 GPU

The NVIDIA H100 is NVIDIA's ninth-generation data center GPU and the successor to the A100. Built on the Hopper architecture, it packs 80 billion transistors onto an 814 mm² die manufactured on TSMC's 4N process, with up to 132 streaming multiprocessors enabled. Together, these changes mark a significant step forward for AI and HPC hardware.

It specializes in supporting workloads such as large language models (LLMs), scientific computing, natural language processing, computer vision, and generative AI platforms. From research labs to enterprise deployments, the H100 offers the scalability and raw performance required to handle next-generation AI models.

Key Features of NVIDIA H100

The H100 introduces several technologies for demanding workloads:

- Transformer Engine: Dedicated hardware and software that accelerate transformer-based models, such as GPT-style LLMs, by mixing FP8 and 16-bit precision. NVIDIA cites up to 9x faster training and up to 30x faster inference compared to the A100.

- Fourth-Gen Tensor Cores: Deliver roughly 2–4x the A100's compute throughput, depending on data type, with support for FP8, FP16, BF16, TF32, FP64, and INT8.

- Multi-Instance GPU (MIG): Partitions a single GPU into up to 7 isolated instances, which can be combined with Confidential Computing for secure multi-tenant use.

- Fourth-Gen NVLink: Delivers 900 GB/s of GPU-to-GPU bandwidth per GPU. With the NVLink Switch System, up to 256 GPUs can be connected in a single cluster.

- HBM3 Memory: 80 GB of HBM3 with up to 3 TB/s of bandwidth reduces memory bottlenecks for AI and HPC workloads.

- Confidential Computing: According to NVIDIA, the first GPU with a hardware-based trusted execution environment, which protects data and code in use for sensitive workloads such as federated learning.

- DPX Instructions: Accelerate dynamic programming algorithms, such as those used in genomics and route optimization, by up to 7x over the A100.
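
To make the FP8 and 80 GB memory figures above more concrete, here is a minimal Python sketch that estimates how much memory a model's weights occupy at different precisions. It is a back-of-the-envelope calculation, not a sizing tool: the function names are hypothetical, 1 GB is taken as 1e9 bytes, and it counts weights only, ignoring activations, KV cache, and optimizer state.

```python
# Rough weight-memory estimate per precision.
# Assumption: weights only; activations, KV cache, and optimizer state are ignored.
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "BF16": 2, "FP8": 1, "INT8": 1}

H100_MEMORY_GB = 80  # capacity quoted in the feature list above


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Return the size of the model weights in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_one_gpu(num_params: float, precision: str,
                    memory_gb: float = H100_MEMORY_GB) -> bool:
    """True if the weights alone fit within the given GPU memory."""
    return weight_footprint_gb(num_params, precision) <= memory_gb


if __name__ == "__main__":
    for precision in ("FP16", "FP8"):
        size = weight_footprint_gb(70e9, precision)
        fits = fits_on_one_gpu(70e9, precision)
        print(f"70B parameters in {precision}: {size:.0f} GB, fits in 80 GB: {fits}")
```

Under these assumptions, a 70B-parameter model needs about 140 GB for FP16 weights but about 70 GB in FP8, which is one reason lower precision matters for large models on a single 80 GB GPU.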

Why NVIDIA H100 Matters

The H100 matters less for its raw specifications than for the practical gains they enable.

- LLM Acceleration: Reported to generate roughly 250–300 tokens/sec on 13B–70B models, nearly double the A100's throughput. Actual figures depend on batch size, precision, and serving software.

- Enterprise AI: Can cut training times for GPT-3-scale models from weeks to days.

- HPC Performance: Offers up to a 3x improvement over the A100 in scientific simulations and high-precision (FP64) floating-point performance.

- Energy Efficiency: Despite a higher TDP (up to 700 W), it delivers better performance per watt than the A100.

- Scalability: With DGX SuperPOD configurations, organizations can scale to exaFLOP-level AI performance.
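
The energy-efficiency point above comes down to simple arithmetic: a GPU that draws more power is still more efficient if its speedup exceeds the power ratio. The sketch below illustrates this. The 700 W figure comes from the list above; the 400 W A100 figure is an assumption (the A100 SXM board power, which this article does not state), and the model assumes both GPUs run at their full TDP, which real workloads rarely do.

```python
H100_TDP_W = 700  # upper bound quoted in the list above
A100_TDP_W = 400  # assumption: A100 SXM board power, not stated in this article


def breakeven_speedup(new_tdp_w: float = H100_TDP_W,
                      old_tdp_w: float = A100_TDP_W) -> float:
    """Speedup at which both GPUs deliver equal performance per watt."""
    return new_tdp_w / old_tdp_w


def perf_per_watt_gain(speedup: float,
                       new_tdp_w: float = H100_TDP_W,
                       old_tdp_w: float = A100_TDP_W) -> float:
    """Performance per watt of the new GPU relative to the old one.

    Assumes both GPUs draw their full TDP for the whole job.
    """
    return speedup / (new_tdp_w / old_tdp_w)


if __name__ == "__main__":
    print(f"Break-even speedup: {breakeven_speedup():.2f}x")
    for speedup in (2.0, 3.0, 9.0):
        gain = perf_per_watt_gain(speedup)
        print(f"{speedup:.0f}x faster -> {gain:.2f}x performance per watt")
```

With these assumed power figures, any workload that runs more than 1.75x faster on the H100 uses less energy per job than on the A100. The 3x HPC improvement cited above would correspond to roughly 1.7x better performance per watt.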

Who Should Use the H100

The NVIDIA H100 is designed for:

- AI researchers and startups training large models.

- Enterprises running mission-critical AI workloads.

- Cloud providers offering GPU-as-a-service.

- HPC organizations in genomics, drug discovery, and scientific computing.

- Developers working on computer vision, generative AI, or robotics at scale.

For teams still running the A100, moving to the H100 can lower costs by shortening training runs and reducing the number of GPUs needed, while adding hardware-level security features.

NVIDIA H100 vs A100

The H100 represents a massive generational leap over the A100:

- Performance: Up to 9x faster training and up to 30x faster inference.

- Memory: HBM3 (up to 3 TB/s) vs the A100's HBM2e (about 2 TB/s).

- Precision: FP8 support, which the A100 lacks.

- Scalability: Higher NVLink bandwidth (900 GB/s vs the A100's 600 GB/s), enabling NVLink-connected clusters of up to 256 GPUs.

- Security: Confidential Computing protects data while it is in use, which suits the H100 to multi-tenant cloud and zero-trust deployments.

The H100's speed and versatility make it a strong fit for organizations running LLMs, autonomous vehicles, or medical AI.
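
The memory bandwidth comparison above can be turned into a rough ceiling on text generation speed. When an LLM generates one token at a time for a single request, each token requires reading all model weights from memory, so bandwidth divided by model size bounds the decode rate. The Python sketch below applies that simplified model. The function name is hypothetical, and the estimate ignores KV-cache traffic, compute limits, and batching, so it is an upper bound for one stream rather than a benchmark prediction.

```python
def decode_tokens_per_sec_ceiling(num_params: float,
                                  bytes_per_param: float,
                                  bandwidth_tb_s: float) -> float:
    """Upper bound on single-stream decode rate.

    Assumption: every generated token reads all weights once from GPU memory,
    and nothing else (KV cache, compute, kernel overhead) limits throughput.
    """
    model_bytes = num_params * bytes_per_param
    return bandwidth_tb_s * 1e12 / model_bytes


if __name__ == "__main__":
    params = 13e9  # a 13B-parameter model
    a100 = decode_tokens_per_sec_ceiling(params, 2, 2.0)      # FP16 weights, 2 TB/s
    h100 = decode_tokens_per_sec_ceiling(params, 2, 3.0)      # FP16 weights, 3 TB/s
    h100_fp8 = decode_tokens_per_sec_ceiling(params, 1, 3.0)  # FP8 weights, 3 TB/s
    print(f"A100 FP16 ceiling: {a100:.0f} tokens/sec")
    print(f"H100 FP16 ceiling: {h100:.0f} tokens/sec")
    print(f"H100 FP8 ceiling:  {h100_fp8:.0f} tokens/sec")
```

For a 13B model with FP16 weights, this gives a ceiling of about 77 tokens/sec at 2 TB/s and about 115 tokens/sec at 3 TB/s, a 1.5x gap from bandwidth alone. Halving the weight size with FP8 doubles the ceiling again. Batched serving shares each weight read across many requests, so aggregate throughput can be much higher than these single-stream numbers.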

FAQ Section

Q: How much faster is NVIDIA H100 compared to A100?

A: According to NVIDIA, the H100 delivers up to 9x faster training and up to 30x faster inference than the A100, helped by FP8 support and HBM3 memory bandwidth of up to 3 TB/s.

Q: Does the NVIDIA H100 support Confidential Computing?

A: Yes. NVIDIA describes it as the first GPU with built-in Confidential Computing, which secures sensitive data and workloads in multi-tenant cloud deployments.

Q: What are the main use cases of NVIDIA H100?

A: It powers LLM training, scientific HPC, computer vision, autonomous driving, genomics, fraud detection, and generative AI platforms.