Google OPAL might provide the missing intermediate layer for AI developers who have to deal with models, frameworks, and APIs that change quickly. OPAL isn’t just another chatbot or cool model; it’s a platform for developers that makes designing, debugging, and deploying AI apps faster, more modular, and less frustrating.

In this post, we’ll look at what Google OPAL is, why it’s built for developers and not just end users, how it fits into Google Labs’ vision, and what sets it apart from Gemini and other AI tools.

What Is Google OPAL and Why It Matters

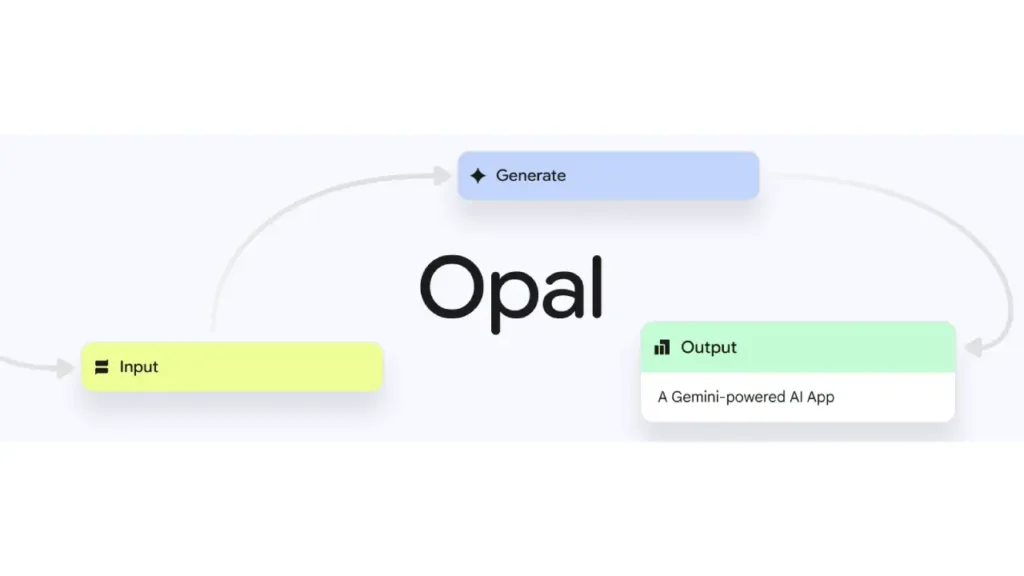

OPAL (Open Programming with Advanced LLMs) is a Google Labs project to build an open, modular, and programmable interface on top of large language models (LLMs). Gemini and other tools focus on how users interact with AI, whereas OPAL is for engineers who want full control over how AI behaves.

“Think of OPAL as your AI command center. You don’t just ask an LLM for help; you also design how that help should look, act, and change over time.”

Google found that LLMs are fantastic at understanding natural language, but they don’t always perform well in structured, multi-step processes, their outputs are hard to reproduce, and they aren’t always safe, especially when you’re building anything for production. That’s where OPAL shines.

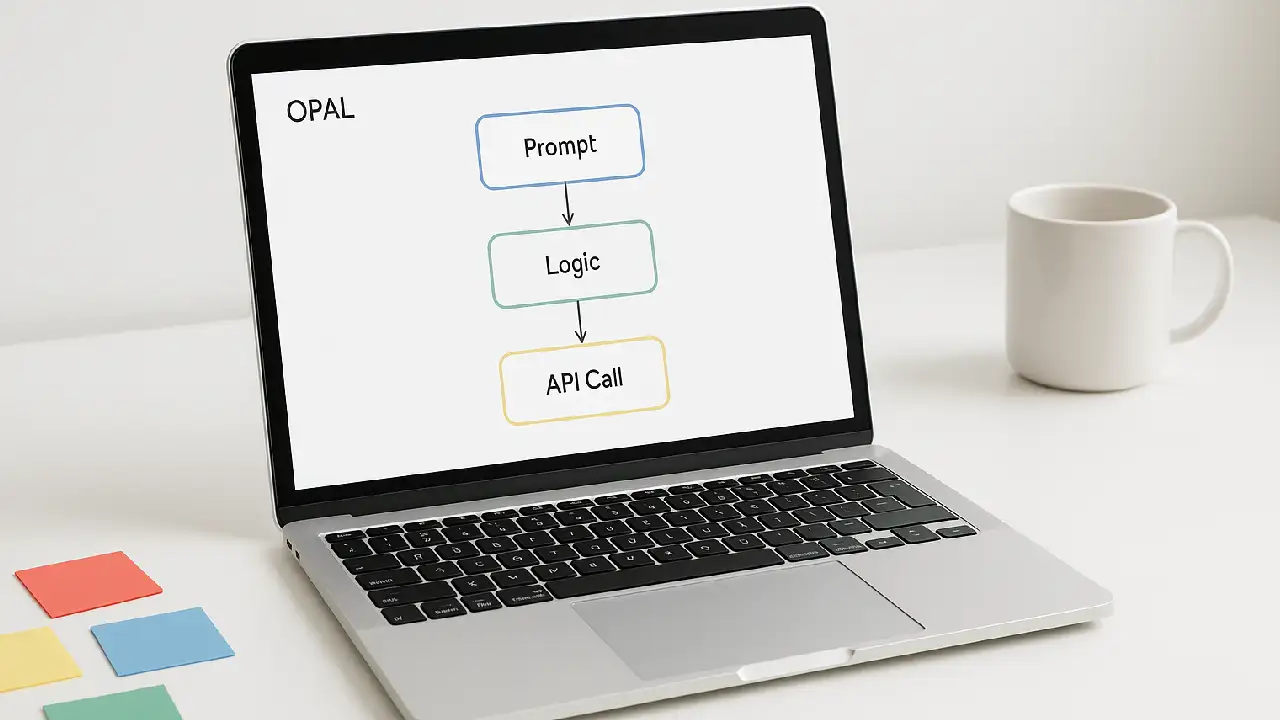

How Google OPAL Works

At its core, OPAL is made of building blocks that can be combined, reused, and debugged. You write “programs” composed of smaller instructions that can:

- Call LLMs with structured prompts

- Chain outputs into logic-based flows

- Access memory, tools, and APIs

- Include human feedback or safety guards

It’s like giving your LLM a tiny brain and a checklist.
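This post doesn’t show OPAL’s own syntax, so here is a minimal plain-Python sketch of the idea, not OPAL’s actual API. It chains three small steps (a structured prompt, a tool lookup, and a follow-up prompt built from earlier outputs) over a shared context. Every name here (`Step`, `run_program`, `fake_llm`, the policy table) is hypothetical, and `fake_llm` is a stub standing in for a real model call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Shared state passed from step to step (hypothetical design, not OPAL's API).
Context = Dict[str, str]


@dataclass
class Step:
    """A reusable building block: a name plus a function over the shared context."""
    name: str
    run: Callable[[Context], Context]


def fake_llm(prompt: str) -> str:
    """Stub standing in for a real LLM call; returns canned answers."""
    if prompt.startswith("Classify"):
        return "Billing"
    return f"Draft reply for: {prompt}"


def classify_ticket(ctx: Context) -> Context:
    # Structured prompt: a fixed template with one slot for user input.
    prompt = f"Classify this support ticket in one word: {ctx['ticket']}"
    # Normalize the model's answer so later steps can rely on its format.
    return {**ctx, "category": fake_llm(prompt).strip().lower()}


def lookup_policy(ctx: Context) -> Context:
    # Tool/API step: a dict stands in for a database or external service.
    policies = {"billing": "Refunds within 30 days.", "other": "Escalate to a human."}
    return {**ctx, "policy": policies.get(ctx["category"], policies["other"])}


def draft_reply(ctx: Context) -> Context:
    # Chaining: this prompt is built from the outputs of earlier steps.
    prompt = f"Reply to '{ctx['ticket']}' using policy: {ctx['policy']}"
    return {**ctx, "reply": fake_llm(prompt)}


def run_program(steps: List[Step], ctx: Context) -> Context:
    """Run the steps in order, feeding each step's output context to the next."""
    for step in steps:
        ctx = step.run(ctx)
    return ctx


program = [
    Step("classify", classify_ticket),
    Step("lookup", lookup_policy),
    Step("draft", draft_reply),
]

if __name__ == "__main__":
    result = run_program(program, {"ticket": "I was charged twice this month."})
    print(result["category"])  # billing
    print(result["policy"])    # Refunds within 30 days.
```

Because each step only reads from and writes to the shared context, you can swap in a real model client, reorder steps, or unit-test a single step in isolation.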

Key Features That Make OPAL a Dev Favorite

Here’s why AI devs are paying attention to Google OPAL:

- Modular AI Programs: Instead of one-shot prompts, you create logic-based programs using reusable components.

- Better Debugging Tools: See exactly how the LLM responded at each step, which is critical in both development and production.

- Safety First: Inject guardrails, validation, or fallback logic within your LLM-powered apps.

- Built for Tools and APIs: Native support for integrating external APIs, search engines, databases, or even other models.

- Local Dev + Cloud Push: Prototype fast locally, then deploy to scalable environments when ready.
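To make the debugging and safety points concrete, here is a minimal sketch (again plain Python, not OPAL’s API) of a guarded model call. It records every raw output in a trace, validates the output against a simple schema, retries on failure, and falls back to a safe “needs review” result. The model stub `flaky_llm`, the invoice schema, and the retry count are all assumptions for illustration.

```python
import json
from typing import Callable, List, Tuple

# Each trace entry is (label, text) so every model response can be inspected later.
Trace = List[Tuple[str, str]]


def flaky_llm(prompt: str, attempt: int) -> str:
    """Hypothetical model stub: malformed output on the first try, valid JSON after."""
    if attempt == 0:
        return "Sure! Here is the JSON: {amount: 42"
    return json.dumps({"amount": 42, "currency": "USD"})


def validate_invoice(raw: str) -> dict:
    """Guardrail: parse the output and check required fields (assumed schema)."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    amount = data.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        raise ValueError("amount must be a non-negative number")
    if data.get("currency") not in {"USD", "EUR"}:
        raise ValueError("unsupported currency")
    return data


def guarded_call(
    prompt: str,
    llm: Callable[[str, int], str],
    validate: Callable[[str], dict],
    trace: Trace,
    max_attempts: int = 3,
) -> dict:
    """Call the model, validate each answer, retry on failure, then fall back."""
    for attempt in range(max_attempts):
        raw = llm(prompt, attempt)
        trace.append((f"attempt {attempt}", raw))
        try:
            return validate(raw)
        except ValueError as err:
            trace.append((f"attempt {attempt} rejected", str(err)))
    # Fallback: never pass unvalidated output downstream; flag it for human review.
    return {"amount": None, "currency": None, "needs_review": True}


if __name__ == "__main__":
    trace: Trace = []
    result = guarded_call("Extract the invoice total as JSON.", flaky_llm, validate_invoice, trace)
    for label, text in trace:
        print(f"{label}: {text}")
    print(result)
```

The key design choice is that unvalidated output never flows downstream: either a response passes its guardrail or the program takes an explicit fallback path, and the trace shows which one happened.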

Pros and Cons of Using OPAL

| Feature | Pros | Cons |
|---|---|---|
| Modular programming | Better control, reuse, and testing | Steeper learning curve than ChatGPT-style tools |
| Debugging + Safety features | Ideal for production apps with high-stakes logic | More engineering overhead |
| Developer-focused APIs | Fast integration with external tools and pipelines | Still in experimental Labs phase |
| Part of Google Labs ecosystem | Easy tie-ins with Gemini, Vertex AI, and other products | Less mature than LangChain or Open Interpreter |

Use Cases AI Devs Will Love

OPAL isn’t trying to be everything for everyone. It’s laser-focused on programmable AI workflows. Use cases include:

- Building LLM-powered internal tools for customer support, HR, and finance

- Creating multi-step AI agents that require reasoning and external data calls

- Embedding structured AI assistants into SaaS apps or developer platforms

- Developing safe-by-design AI features in healthtech, fintech, or govtech

How Google OPAL Fits into Google Labs’ AI Strategy

Google Labs is the company’s playground for bleeding-edge experiments. Gemini is the headline act, but OPAL is the dev toolkit behind the curtain. It complements:

- Gemini models – by giving developers structure around raw LLM output

- AI Studio – for experimenting and visualizing prompt chains

- Vertex AI – where production-grade apps go to scale

“OPAL shows Google is serious about making AI usable not just for end users, but for builders — the ones who turn language into products.”

Takeaway Box

- Google OPAL is made for developers building serious AI tools

- It simplifies debugging, logic flows, and integration with APIs

- Ideal for creating safe, multi-step, LLM-driven apps

- Lives inside Google Labs, works alongside Gemini and Vertex AI

Conclusion

Google OPAL is not just another cool AI toy. It’s a foundational tool that brings engineering discipline to LLM workflows. Whether you’re a solo developer or part of a team scaling AI systems in production, OPAL gives you the kind of programmatic control that Gemini or ChatGPT can’t give you on their own.

Google OPAL is still in its early phases, but for AI developers it looks like the start of a much-needed shift from “magical black boxes” to structured, testable AI systems.

Would you use OPAL to develop your next AI app? Tell us.